Podwatch

Please check out the article on Medium about Podwatch!

Podwatch is an error monitoring tool and service that tracks warnings and errors emitted by a Kubernetes cluster. The project is open source, and I built it together with four other developers who shared in its design and development.

Watcher Service

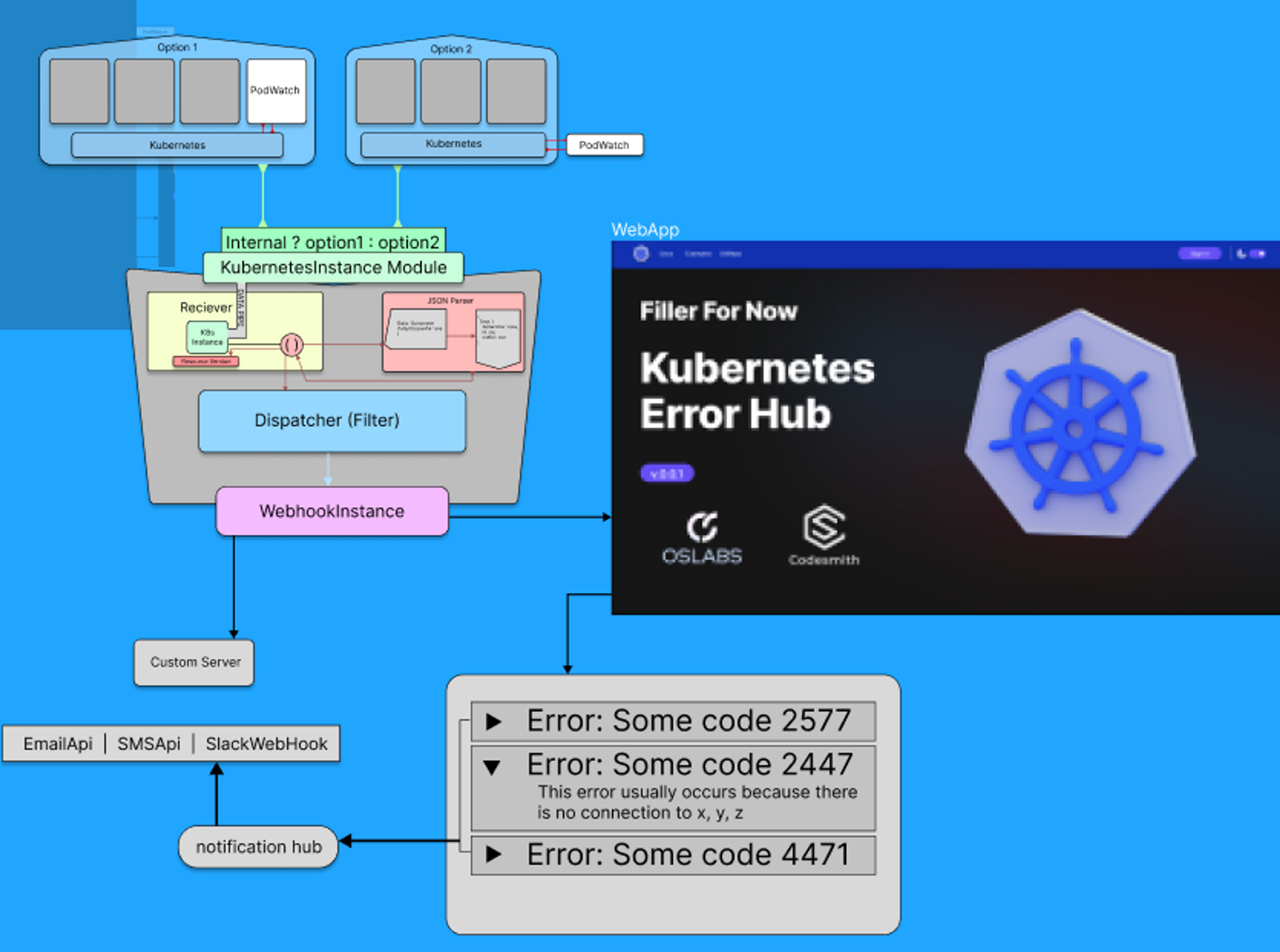

As a team, our first task was to design the overall system. We realized that we would likely need a service running inside the cluster that could report information to the outside world, something we affectionately began to call the "Watcher" service. This service would have easy access to the Kubernetes API, and more specifically the Events API, using Cluster Roles. Originally, we thought we might also develop an alternative mode that would allow the Watcher Service to run outside the cluster; however, this idea was ultimately abandoned in favor of completing other aspects of the project.

The Watcher Service would have a simple job: receive information from the cluster Events API about the status of the cluster and its pods, and relay that information outwards as a webhook. We decided to let the user choose whether the information would be sent to our own Podwatch Web Service or relayed to their own custom server, where they could process it however they see fit.
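To make the relay idea concrete, here is a minimal sketch of that flow, not the actual Podwatch code. The payload shape, the default URL, and the function names (`resolveWebhookUrl`, `toPayload`, `relay`) are all hypothetical, and the choice to forward only `Warning` events is an assumption. The event fields it reads (`type`, `reason`, `message`, `involvedObject`) are standard fields of a Kubernetes Event object.

```typescript
// Subset of a Kubernetes watch notification for core/v1 Events.
interface WatchNotification {
  type: string; // 'ADDED', 'MODIFIED', 'DELETED', ...
  object: {
    type?: string; // 'Normal' or 'Warning'
    reason?: string;
    message?: string;
    involvedObject?: { kind?: string; name?: string; namespace?: string };
  };
}

// Hypothetical payload shape; the real Podwatch webhook body may differ.
interface WebhookPayload {
  reason: string;
  message: string;
  kind: string;
  name: string;
  namespace: string;
}

// Injected transport, so tests can pass a mock instead of a real HTTP client.
type Sender = (url: string, body: string) => void;

// Placeholder for the hosted service endpoint (not the real URL).
const DEFAULT_WEBHOOK_URL = 'https://podwatch.example/webhook';

// Use the user's custom server when one is configured, else the default.
function resolveWebhookUrl(customUrl?: string): string {
  const trimmed = (customUrl ?? '').trim();
  return trimmed !== '' ? trimmed : DEFAULT_WEBHOOK_URL;
}

// Assumption: only 'Warning' events are relayed; 'Normal' events are skipped.
function toPayload(n: WatchNotification): WebhookPayload | null {
  if (n.object.type !== 'Warning') return null;
  const target = n.object.involvedObject;
  return {
    reason: n.object.reason ?? 'Unknown',
    message: n.object.message ?? '',
    kind: target?.kind ?? 'Unknown',
    name: target?.name ?? 'Unknown',
    namespace: target?.namespace ?? '',
  };
}

// Returns true when the notification was forwarded to the webhook.
function relay(n: WatchNotification, send: Sender, customUrl?: string): boolean {
  const payload = toPayload(n);
  if (payload === null) return false;
  send(resolveWebhookUrl(customUrl), JSON.stringify(payload));
  return true;
}
```

Because the transport is passed in as a function, a test can supply a sender that records its arguments, while a deployment could wrap whatever HTTP client it already uses. The sketch leaves out retries and handling of failed sends.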

The Watcher Service was my main area of focus, and I'm proud of how robust I was able to make it while keeping the code simple. I used Joi validation to ensure that all environment variables were valid, I used dependency injection so that every component could easily be tested with mock interfaces, and I kept the code clean and organized throughout. The most complex aspect was receiving and processing event streams from the Kubernetes API. This data came in JSON format but was chunked, and therefore required a custom Transform class for processing the JSON chunks into objects.

    /**
     * Transforms the stream of data into JSON objects.
     * @param chunk A chunk of data from the stream that may contain multiple or partial JSON objects.
     * @param encoding The encoding of the chunk (unused).
     * @param callback A callback to be called when the chunk has been processed.
     */
    _transform(chunk: any, encoding: any, callback: any) {
      this.buffer += chunk.toString();
      let jsonEndIndex = 0;
      while ((jsonEndIndex = this.buffer.indexOf('}', jsonEndIndex + 1)) !== -1) {
        try {
          const jsonObject = JSON.parse(this.buffer.slice(0, jsonEndIndex + 1));
          this.buffer = this.buffer.slice(jsonEndIndex + 1);
          // Restart the search at the front of the shortened buffer so a
          // following object in the same chunk is not skipped.
          jsonEndIndex = 0;
          this.emit('json', jsonObject);
        } catch (err) {
          // Not a complete object yet; keep scanning for a later closing brace.
        }
      }
      callback();
    }

Additionally, the Watcher had to handle cases where the Kubernetes API closed the piped event stream, reopening it immediately and resuming streamed events from the last resource version received.

    /**
     * Establishes an event stream from the Kubernetes API. The stream will be piped to a JSON parser that will emit JSON objects as they are received. The JSON objects will then be dispatched to the event dispatcher.
     */
    private async establishEventStream() {
      const params = new URLSearchParams();
      params.set('watch', 'true');
      if (this.resourceVersion) {
        params.set('resourceVersion', this.resourceVersion);
      }
      const endpoint = `/api/v1/events?${params.toString()}`;
      this.logger.log('Establishing event stream from endpoint: ', endpoint);
      try {
        const response = await this.kubernetesInstance.get(endpoint, {
          responseType: 'stream',
        });
        this.stream = response.data as Readable;
        this.stream.pipe(this.jsonStreamParser);
        this.jsonStreamParser.on('json', (event: NativeKEvent) =>
          this.handleStreamEventReceived(event)
        );
        this.jsonStreamParser.on('error', (error: any) =>
          this.handleStreamError(error)
        );
        this.jsonStreamParser.on('close', () => this.handleStreamReconnect());
        this.logger.log('Event stream established.');
      } catch (error) {
        this.handleStreamError(error);
      }
    }

    /**
     * Restarts the event stream after a delay. If the stream has been restarted too many times in a short period of time, the stream will not be restarted.
     * @param delay The delay in milliseconds before the stream will be restarted.
     */
    private handleStreamReconnect(delay = 0) {
      this.ejectStream();
      if (this.shouldRestart()) {
        setTimeout(() => {
          this.logger.info('Attempting to reconnect to event stream');
          this.establishEventStream();
        }, delay);
      }
    }

Web Service

The backend of Podwatch is built as a robust Express server with MongoDB as a database for all users, clusters, and error events (which we called KErrors to avoid confusion with Node.js Errors). We also used Passport.js for authentication.

For the web service front end, we decided to use Next.js for its fast server-side rendering and ease of development. We kept the interface fairly simple and focused on displaying the information in the most useful way possible. Upon signing in, you are presented with a dashboard for managing clusters, viewing error information, and adjusting settings.

The front end Next.js server is deployed on Vercel, which, as always, is easy to set up and maintain. The back end server is deployed on a DigitalOcean droplet. This deployment was more involved because it required setting up a new Linux machine and preparing it to serve data over HTTPS with an SSL certificate. The database is hosted on MongoDB Atlas.

Configuration

One of our goals with this project was to keep the service simple enough to provide a streamlined developer experience when setting up a cluster for monitoring. As such, our documentation is very short, and setting up Podwatch can take as little as five minutes. We made sure to outline the setup steps in as much detail as possible in both our documentation and our GitHub README.md file.

Podwatch is also set up to work with Helm, although this feature was never fully completed for use in production. Using Helm would make setup even easier for developers by centralizing the Watcher Service configuration. Building Helm charts, which use Go templates to generate YAML, was an interesting experience.

    containers:
      - name: {{ .Chart.Name }}
        image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
        imagePullPolicy: {{ .Values.image.pullPolicy }}
        envFrom:
          - configMapRef:
              name: {{ include "podwatch.fullname" . }}-config
          - secretRef:
              name: {{ include "podwatch.fullname" . }}-secret
              optional: true
    serviceAccountName: {{ .Values.serviceAccount.name }}
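
The `envFrom` entries in this template expose every key of the referenced ConfigMap and Secret as environment variables in the Watcher container, which is where the environment validation mentioned earlier comes in. The real service uses Joi for this; the sketch below swaps in hand-written checks so that it stays dependency-free. The variable names (`PODWATCH_WEBHOOK_URL`, `PODWATCH_CLIENT_SECRET`, `PODWATCH_MAX_RESTARTS`) and the `WatcherConfig` shape are hypothetical and only illustrate the idea of failing fast on bad configuration.

```typescript
type Env = Record<string, string | undefined>;

// Hypothetical settings; the real Watcher's variable names may differ.
interface WatcherConfig {
  webhookUrl: string;
  clientSecret: string | undefined;
  maxRestarts: number;
}

// Collects every problem before failing, so a misconfigured pod reports
// all invalid variables at once instead of one per restart.
function loadConfig(env: Env): WatcherConfig {
  const errors: string[] = [];

  // Required: where relayed events are sent.
  const webhookUrl = (env['PODWATCH_WEBHOOK_URL'] ?? '').trim();
  if (!/^https?:\/\/\S+$/.test(webhookUrl)) {
    errors.push('PODWATCH_WEBHOOK_URL must be an http(s) URL');
  }

  // Optional, mirroring `optional: true` on the secretRef.
  const clientSecret = env['PODWATCH_CLIENT_SECRET'];

  // Optional with a default; must be a positive whole number.
  const rawMax = env['PODWATCH_MAX_RESTARTS'] ?? '5';
  const maxRestarts = Number(rawMax);
  if (!/^\d+$/.test(rawMax) || maxRestarts < 1) {
    errors.push('PODWATCH_MAX_RESTARTS must be a positive integer');
  }

  if (errors.length > 0) {
    throw new Error('Invalid configuration: ' + errors.join('; '));
  }
  return { webhookUrl, clientSecret, maxRestarts };
}
```

In a Node.js process this would typically be called once at startup as `loadConfig(process.env)`, so the pod exits with a readable message before opening any event stream.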